Building a robust and accurate machine learning model doesn't end with choosing the right algorithm. A critical factor in how well the model performs is hyperparameter optimisation. Hyperparameters are configuration settings that are fixed before training rather than learned from the data, and tuning them carefully can significantly improve performance.

If you're taking a data science course, mastering hyperparameter tuning is essential for moving beyond basic modelling towards efficient, production-ready machine learning systems. This post explains what hyperparameters are, why they matter, and which techniques you can use to optimise them effectively.

What Are Hyperparameters?

Before diving into optimisation, it helps to be clear about what hyperparameters are. Parameters are values the model learns from the data, such as the coefficients in linear regression. Hyperparameters, by contrast, are set before the learning process begins and control how that learning happens.

Some examples include:

- Learning rate in gradient descent

- Number of trees in a random forest

- Number of hidden layers and neurons in a neural network

- Regularisation strength, such as the L1/L2 penalty coefficient

- Kernel type in SVMs

Selecting the right combination of hyperparameters can lead to better accuracy, generalisation, and computational efficiency.
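To make the distinction concrete, here is a minimal sketch in plain Python of linear regression fitted by gradient descent. The function name `train_linear_regression` and the toy data are illustrative assumptions: `learning_rate` and `epochs` are hyperparameters chosen before training starts, while `w` and `b` are parameters the model learns from the data.

```python
def train_linear_regression(xs, ys, learning_rate, epochs):
    """Fit y = w * x + b by batch gradient descent on mean squared error.

    learning_rate and epochs are hyperparameters: chosen before training.
    w and b are parameters: learned from the data.
    """
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Toy data generated exactly by y = 2x + 1
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
w, b = train_linear_regression(xs, ys, learning_rate=0.05, epochs=2000)
print(f"learned parameters: w={w:.3f}, b={b:.3f}")
```

With these settings the learned values approach w = 2 and b = 1. Changing `learning_rate` or `epochs` changes how quickly, and whether, the model gets there, which is exactly what hyperparameter tuning explores.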

Why Does Hyperparameter Tuning Matter?

Even the best algorithm can perform poorly if its hyperparameters are set badly. For instance, a learning rate that is too high can make gradient descent overshoot the minimum or even diverge, while one that is too low makes training converge very slowly.
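The learning-rate trade-off is easy to see in a few lines of plain Python. This sketch assumes a toy objective, f(w) = w², rather than a real model, and runs the same number of gradient-descent steps with three learning rates chosen for illustration.

```python
def gradient_descent(learning_rate, steps=50, w0=1.0):
    """Minimise f(w) = w**2 (gradient 2*w, minimum at w = 0) and return the final w."""
    w = w0
    for _ in range(steps):
        w -= learning_rate * 2 * w
    return w


for lr in (0.001, 0.1, 1.1):
    print(f"learning_rate={lr}: final w = {gradient_descent(lr):.6g}")
```

Each step multiplies w by (1 - 2 × learning_rate). With 0.001 the factor is 0.998, so w barely moves in 50 steps; with 0.1 it is 0.8 and w shrinks quickly towards the minimum; with 1.1 it is -1.2, so w overshoots zero on every step and its magnitude grows instead of shrinking.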

Here’s what optimised hyperparameters can bring to the table:

- Improved Accuracy: Helps strike a better balance between bias and variance.

- Faster Training: Proper settings reduce the number of iterations needed.

- Better Generalisation: Avoids overfitting or underfitting.

- Model Interpretability: Particularly in simpler models; for example, limiting the depth of a decision tree keeps it small enough to inspect.

Popular Techniques for Hyperparameter Optimisation

There are several approaches to optimising hyperparameters, each with its own pros and cons.

- Manual Search

This involves changing one or more hyperparameters by hand and observing the result. While it’s a good way to understand how different hyperparameters affect the model, it’s not scalable or efficient.

Pros:

- Easy to understand

- Useful for gaining intuition

Cons:

- Time-consuming

- Subjective and prone to human error

- Grid Search

This method creates a “grid” of all possible combinations of specified hyperparameter values and evaluates the model performance for each combination using cross-validation.

Pros:

- Exhaustive within the grid you define

- Easy to parallelise

Cons:

- Computationally expensive

- Cost grows exponentially with the number of hyperparameters, so it is inefficient for large search spaces
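The mechanics of grid search fit in a few lines. In this sketch, `validation_score` is a hypothetical stand-in for a cross-validated score (higher is better) and the grid values are arbitrary assumptions. In practice, scikit-learn's `GridSearchCV` runs the same exhaustive loop, with cross-validation, over a `param_grid` dictionary of the same shape.

```python
from itertools import product


def validation_score(learning_rate, max_depth):
    # Hypothetical stand-in for a cross-validated score (higher is better),
    # peaking at learning_rate=0.1, max_depth=6
    return -((learning_rate - 0.1) ** 2) - 0.001 * (max_depth - 6) ** 2


param_grid = {"learning_rate": [0.01, 0.05, 0.1, 0.3], "max_depth": [2, 4, 6, 8]}


def grid_search(grid, score_fn):
    names = list(grid)
    best_params, best_score = None, float("-inf")
    # Evaluate every combination of candidate values (4 x 4 = 16 here)
    for values in product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        score = score_fn(**params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


best_params, best_score = grid_search(param_grid, validation_score)
print(best_params, best_score)
```

Adding a third hyperparameter with four candidate values would raise the count from 16 to 64 evaluations, which is why grid search becomes impractical for large search spaces.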

- Random Search

Instead of trying every possible combination, this method randomly selects combinations of hyperparameters and evaluates them.

Pros:

- Often finds reasonable solutions faster than grid search

- Scales better to high-dimensional spaces, especially when only a few hyperparameters really matter

Cons:

- May miss the optimal set

- Still computationally heavy with large datasets
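Random search replaces the exhaustive loop with a fixed budget of sampled configurations. In this sketch `validation_score` is again a hypothetical stand-in, and the sampling ranges are assumptions; sampling the learning rate on a log scale is a common choice because useful values often span several orders of magnitude. scikit-learn's `RandomizedSearchCV` follows the same pattern, with the budget set by its `n_iter` argument.

```python
import random


def validation_score(learning_rate, max_depth):
    # Hypothetical stand-in for a cross-validated score (higher is better),
    # peaking at learning_rate=0.1, max_depth=6
    return -((learning_rate - 0.1) ** 2) - 0.001 * (max_depth - 6) ** 2


def sample_params(rng):
    # Assumed search ranges; the learning rate is sampled log-uniformly in [1e-3, 1]
    return {
        "learning_rate": 10 ** rng.uniform(-3, 0),
        "max_depth": rng.randint(2, 10),
    }


def random_search(score_fn, n_trials=30, seed=0):
    rng = random.Random(seed)
    best_params, best_score = None, float("-inf")
    for _ in range(n_trials):
        params = sample_params(rng)
        score = score_fn(**params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


best_params, best_score = random_search(validation_score)
print(best_params, best_score)
```

The cost is fixed by `n_trials` regardless of how many hyperparameters are searched, which is what makes random search attractive for larger spaces.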

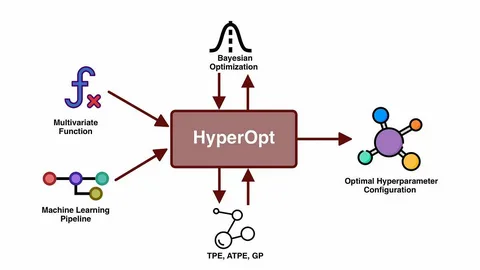

- Bayesian Optimisation

Bayesian optimisation builds a probabilistic surrogate model of how performance depends on the hyperparameters and updates it after each evaluation. Based on past results, it chooses the next set of hyperparameters to try, balancing exploration of untested regions against exploitation of promising ones.

Pros:

- Typically needs fewer evaluations than grid or random search

- Learns from previous attempts

Cons:

- More complex to implement

- Adds computational overhead of its own, since the surrogate model is refitted after each evaluation
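The following is a from-scratch sketch of one common form of Bayesian optimisation, written in plain Python so every step is visible: a Gaussian-process surrogate with an RBF kernel and an upper-confidence-bound (UCB) acquisition function, searching a single hypothetical hyperparameter `x` in [0, 1]. The objective, kernel length scale, noise term and `kappa` are all illustrative assumptions. Libraries such as Optuna and Hyperopt commonly use a different surrogate, the Tree-structured Parzen Estimator, and handle many hyperparameters at once.

```python
import math
import random


def objective(x):
    # Hypothetical validation score for one hyperparameter x in [0, 1]; best at x = 0.3
    return -((x - 0.3) ** 2)


def rbf(a, b, length_scale=0.1):
    # Squared-exponential (RBF) kernel: nearby x values get correlated scores
    return math.exp(-((a - b) ** 2) / (2 * length_scale ** 2))


def invert(matrix):
    # Gauss-Jordan elimination with partial pivoting (fine for small matrices)
    n = len(matrix)
    aug = [row[:] + [1.0 if i == j else 0.0 for j in range(n)] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def fit_gp(xs, ys, noise=1e-4):
    # Gaussian-process surrogate with zero prior mean; returns a predict(x) function
    K = [[rbf(a, b) + (noise if i == j else 0.0) for j, b in enumerate(xs)]
         for i, a in enumerate(xs)]
    K_inv = invert(K)
    alpha = [sum(K_inv[i][j] * ys[j] for j in range(len(ys))) for i in range(len(ys))]

    def predict(x):
        k = [rbf(a, x) for a in xs]
        mean = sum(ki * ai for ki, ai in zip(k, alpha))
        v = [sum(K_inv[i][j] * k[j] for j in range(len(k))) for i in range(len(k))]
        var = rbf(x, x) - sum(ki * vi for ki, vi in zip(k, v))
        return mean, math.sqrt(max(var, 1e-12))

    return predict


def bayes_opt(n_init=3, n_iter=10, kappa=2.0, seed=0):
    rng = random.Random(seed)
    candidates = [i / 200 for i in range(201)]  # discretised search space
    xs = [rng.random() for _ in range(n_init)]  # a few random starting points
    ys = [objective(x) for x in xs]
    for _ in range(n_iter):
        predict = fit_gp(xs, ys)

        def ucb(x):
            # Upper confidence bound: high predicted score OR high uncertainty
            mean, std = predict(x)
            return mean + kappa * std

        x_next = max(candidates, key=ucb)
        xs.append(x_next)
        ys.append(objective(x_next))
    best = max(range(len(xs)), key=lambda i: ys[i])
    return xs[best], ys[best]


best_x, best_y = bayes_opt()
print(f"best x = {best_x:.3f}, score = {best_y:.5f}")
```

Raising `kappa` favours exploring uncertain regions; lowering it concentrates evaluations near the current best. Refitting the surrogate on every iteration is the overhead listed under Cons.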

- Genetic Algorithms

Inspired by natural selection, this approach starts with a population of hyperparameter combinations, evolves them using mutation and crossover, and selects the fittest individuals.

Pros:

- Suitable for complex, non-linear search spaces

- Less likely to get stuck in local optima

Cons:

- Computationally intensive

- Has its own hyperparameters (population size, mutation rate) that need tuning
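A genetic algorithm over two hyperparameters can be sketched with the standard library alone. Here each individual is a dictionary of hyperparameters, `fitness` is a hypothetical stand-in for a validation score, and the operators (truncation selection, uniform crossover, small random mutations, plus elitism) are one common combination among many. Population size, mutation rate and the number of elites are themselves settings of the search, which is the extra tuning burden mentioned under Cons.

```python
import random


def fitness(ind):
    # Hypothetical validation score (higher is better); best at learning_rate=0.1, max_depth=6
    return -((ind["learning_rate"] - 0.1) ** 2) - 0.001 * (ind["max_depth"] - 6) ** 2


def random_individual(rng):
    return {"learning_rate": rng.uniform(0.001, 0.3), "max_depth": rng.randint(1, 12)}


def crossover(a, b, rng):
    # Uniform crossover: each hyperparameter is inherited from one parent at random
    return {key: (a[key] if rng.random() < 0.5 else b[key]) for key in a}


def mutate(ind, rng, rate=0.2):
    child = dict(ind)
    if rng.random() < rate:
        # Gaussian nudge, clipped to the allowed range
        child["learning_rate"] = min(0.3, max(0.001, child["learning_rate"] + rng.gauss(0, 0.03)))
    if rng.random() < rate:
        child["max_depth"] = min(12, max(1, child["max_depth"] + rng.choice([-1, 1])))
    return child


def genetic_search(pop_size=20, generations=15, n_elite=2, seed=0):
    rng = random.Random(seed)
    population = [random_individual(rng) for _ in range(pop_size)]
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        parents = population[: pop_size // 2]  # truncation selection: keep the fittest half
        children = population[:n_elite]        # elitism: the best survive unchanged
        while len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            children.append(mutate(crossover(a, b, rng), rng))
        population = children
    return max(population, key=fitness)


best = genetic_search()
print(best, fitness(best))
```

Elitism guarantees the best score never gets worse from one generation to the next, while crossover and mutation keep exploring new combinations.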

- Automated Machine Learning (AutoML)

Tools like Google AutoML, Auto-sklearn, and H2O AutoML automate model selection and hyperparameter tuning. They combine multiple optimisation techniques and often work well out of the box.

Pros:

- Fully automated

- Can handle large datasets and complex models

Cons:

- Less control over the process

- Can behave like a “black box”

Best Practices for Hyperparameter Tuning

- Start Simple: Begin with default values and move to more complex tuning once you have a working model.

- Use Cross-Validation: Use k-fold cross-validation on the training data to get reliable performance estimates, and keep a separate test set untouched until the final evaluation.

- Watch for Overfitting: Monitor the gap between training and validation performance.

- Scale Your Features: Essential for models sensitive to input scale (e.g., SVM, KNN).

- Leverage GPUs: If you’re working with deep learning models, use GPU acceleration for faster training.

- Track Experiments: Use tools like MLflow, Weights & Biases, or TensorBoard to track and compare different hyperparameter runs.
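The cross-validation advice can be illustrated without any library. This sketch assumes a deliberately simple model, one-dimensional ridge regression without an intercept (closed form w = Σxy / (Σx² + λ)), and uses 5-fold cross-validation to compare a few values of the regularisation strength λ on synthetic data. The model, data and candidate values are illustrative assumptions; in practice, scikit-learn's `KFold` and `cross_val_score` handle the splitting and scoring.

```python
import random


def k_fold_indices(n, k, seed=0):
    # Shuffle row indices once, then deal them into k disjoint, roughly equal folds
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]


def fit_ridge_1d(xs, ys, lam):
    # Closed-form 1-D ridge regression without intercept
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + lam)


def cv_mse(xs, ys, lam, k=5):
    # Average held-out mean squared error across the k folds
    errors = []
    for fold in k_fold_indices(len(xs), k):
        held_out = set(fold)
        train = [i for i in range(len(xs)) if i not in held_out]
        w = fit_ridge_1d([xs[i] for i in train], [ys[i] for i in train], lam)
        errors.append(sum((ys[i] - w * xs[i]) ** 2 for i in fold) / len(fold))
    return sum(errors) / k


# Synthetic data: y = 2x plus a little Gaussian noise
rng = random.Random(1)
xs = [rng.uniform(-1, 1) for _ in range(50)]
ys = [2 * x + rng.gauss(0, 0.1) for x in xs]

scores = {lam: cv_mse(xs, ys, lam) for lam in [0.0, 0.1, 1.0, 10.0, 100.0]}
best_lam = min(scores, key=scores.get)
print(scores, "best lambda:", best_lam)
```

Every candidate is scored only on data it was not fitted to, so a strongly over-regularised value such as λ = 100 shows up clearly as worse.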

Hyperparameter Tuning in Different Algorithms

Here are a few examples of important hyperparameters for popular models:

- Random Forest: n_estimators, max_depth, min_samples_split

- SVM: C, kernel, gamma

- XGBoost: learning_rate, n_estimators, max_depth, subsample

- Neural Networks: learning_rate, batch_size, number of layers, dropout rate
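One way to write these down is as dictionaries of candidate values, the format scikit-learn's `GridSearchCV` accepts as `param_grid`. The specific values below are illustrative assumptions, not recommended defaults, and the neural-network keys are hypothetical names, since each framework spells them differently. Counting the combinations shows how quickly a full grid grows.

```python
from math import prod

# Illustrative candidate values only; they are not recommended defaults
search_spaces = {
    "random_forest": {
        "n_estimators": [100, 300, 500],
        "max_depth": [None, 10, 20],
        "min_samples_split": [2, 5, 10],
    },
    "svm": {
        "C": [0.1, 1, 10, 100],
        "kernel": ["linear", "rbf"],
        "gamma": ["scale", 0.01, 0.1],
    },
    "xgboost": {
        "learning_rate": [0.01, 0.1, 0.3],
        "n_estimators": [100, 500],
        "max_depth": [3, 6, 9],
        "subsample": [0.8, 1.0],
    },
    # Hypothetical key names: each deep learning framework spells these differently
    "neural_network": {
        "learning_rate": [1e-4, 1e-3, 1e-2],
        "batch_size": [32, 64, 128],
        "num_layers": [1, 2, 3],
        "dropout_rate": [0.0, 0.2, 0.5],
    },
}


def grid_size(space):
    # Number of combinations a full grid search would have to evaluate
    return prod(len(values) for values in space.values())


for model, space in search_spaces.items():
    print(model, grid_size(space))
```

With the SVM grid, `gamma` has no effect when `kernel` is `"linear"`, so some of those combinations are redundant; scikit-learn lets you pass a list of dictionaries as `param_grid` to avoid this.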

Tools for Hyperparameter Optimisation

Several libraries simplify the process of hyperparameter tuning:

- Scikit-learn: GridSearchCV, RandomizedSearchCV

- Optuna: Lightweight and efficient; supports pruning (early stopping of unpromising trials)

- Hyperopt: Bayesian optimisation using the Tree-structured Parzen Estimator (TPE)

- Keras Tuner: For tuning Keras models

- Ray Tune: Scales tuning across multiple CPUs, GPUs, or machines and supports distributed training

Conclusion

Hyperparameter optimisation is both an art and a science. It requires a strong understanding of the model, problem domain, and computational resources. By learning how to approach hyperparameter tuning systematically, you can unlock the full potential of your machine learning models.

As industries increasingly rely on AI and data-driven solutions, becoming proficient in tuning and optimising models makes you stand out in the competitive job market. Whether you’re dealing with structured data, images, or natural language, the ability to fine-tune your models will significantly enhance your impact as a data professional.

If you want to take your skills to the next level and master practical machine learning techniques, enrolling in a data scientist course in Hyderabad can be a great way to gain hands-on experience with hyperparameter tuning and other advanced methods. Such programs equip you with real-world projects, tools, and guidance from industry experts to help you become a job-ready data scientist.

ExcelR – Data Science, Data Analytics and Business Analyst Course Training in Hyderabad

Address: Cyber Towers, PHASE-2, 5th Floor, Quadrant-2, HITEC City, Hyderabad, Telangana 500081

Phone: 096321 56744